Data Management

Musings on rigorous data management

1. The problem with the current state of affairs

Data is beguiling. It can initially seem simple to deal with: “here I have a file, and that’s it”. However, as soon as you do things with the data you’re prone to be asked tricky questions like:

- where’s the data?

- how did you process that data?

- how can I be sure I’m looking at the same data as you?

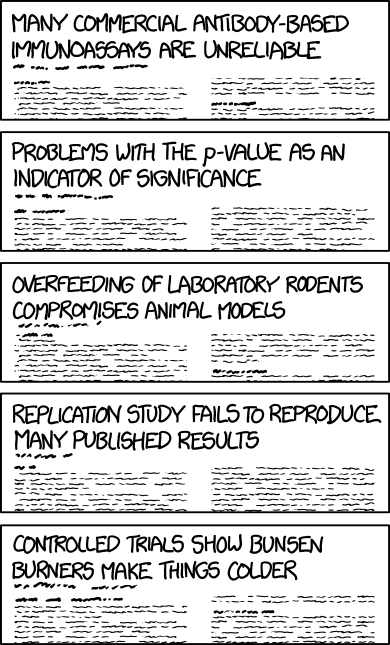

This is no small part of the replication crisis.

Further concerns arise as soon as you start dealing with large quantities of data, or computationally expensive derived data sets. For example:

- Have I already computed this data set somewhere else?

- Is my generated data up to date with its sources/dependencies?

Generic tools exist for many parts of this problem, but there are some benefits that can be realised by creating a Julia-specific system, namely:

- Having all pertinent environmental information in the data processing contained in a single Project.toml

- Improved convenience in data loading and management, compared to a generic solution

- Allowing datasets to be easily shared with a Julia package

In addition, the Julia community seems to have a strong tendency to NIH[1] tools, so we may as well get ahead of this and try to make something good 😛.

1.1. Pre-existing solutions

1.1.1. DataLad

- Does a lot of things well

- Puts information on how to create data in git commit messages (bad)

- No data file specification

1.1.2. Kedro data catalog

- Has a file defining all the data (good)

- Has poor versioning

- https://kedro.readthedocs.io/en/stable/data/data_catalog.html

- Data Catalog CLI

1.1.3. Snakemake

- Workflow manager, with remote file support

- Snakemake Remote Files

- Good list of possible file locations to handle

- Drawback is that you have to specify the location you expect (S3, HTTP, FTP, etc.)

- No data file specification

1.1.4. Nextflow

- Workflow manager, with remote file support

- Docs on files and IO

- Docs on S3

- You just call file() and Nextflow works out the protocol under the hood, i.e. whether it should pull the file from S3, HTTP, FTP, or a local path. This is in contrast to Snakemake’s remote files, and is how I think DataSets.jl should expose files to users.

- No data file specification

2. Thoughts on the various facets of data management

2.1. Conceptually, what is a data set?

Simply put, a source of information.

This may sound so obvious it’s not worth saying, but it’s worth mentioning so we don’t start making assumptions like “all data sets have a file”, etc.

In building the model, it’s worth considering some common hypothetical situations, such as when the data set is:

- a file on the local disk

- a file in an s3 bucket

- a file on the web

- a connection to an actively-changing DB

- a simple value

2.1.1. Implications for the Data specification

We can split the general case of “reading data sources in Julia” into two steps:

- Acquiring a form of the data that can be worked with, e.g. a local copy of the file

- Loading a Julia representation of the data, so it may be worked with

So, this gives us three major components to a data set representation:

- metadata

  - Base information about the data set (name, description, uuid, etc.)

- storage

  - A description of how the data should be acquired

- loader

  - A description of how the data should be loaded

Allowing for writing data too necessitates a further two components:

- writer

  - A description of how the data should be transformed from a Julia object into a form suitable for storage

- writer storage

  - A description of how that storage-suitable form should be stored.

  - This may reuse the storage used for reading the data

The nature of these five components will be explored later, but first we must explore the additional functionality we want the complete form to allow for.

2.2. Versioning

Data sources may operate under a number of different schemes:

- single release

  - There is only one version of the data, e.g. the Iris data set.

- multiple (infrequent) release

  - The data set has (usually versioned) updates, e.g. ClinVar, which has monthly releases.

- multiple (frequent) release

  - The data set changes rapidly, with releases often un-versioned, e.g. an API which provides a weather forecast for the next few days.

- in flux

  - The data is near-constantly changing, e.g. a connection to a database which is being updated live.

To support reproducibility well, we should be able to provide guarantees regarding versions and data integrity. A version parameter would be useful for recording which release of the data is being used, and data integrity can be accounted for by hashing the data and recording the hash in the data specification too. In the absence of version information, recording the hash also allows for a particular instance of a data set to be unambiguously referred to.
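
As a minimal sketch of the integrity check described above, the snippet below hashes a stream with the SHA standard library and compares it against a recorded value. The helper names `datahash` and `verify` are hypothetical, and SHA-1 is assumed only because the example hashes later in this document are 40 hex characters long.

```julia
using SHA  # standard library

# Hypothetical helper: hex-encoded SHA-1 of everything readable from `io`.
datahash(io::IO) = bytes2hex(sha1(io))

# Hypothetical helper: compare the data against the hash recorded in the
# data specification.
verify(io::IO, recorded::AbstractString) = datahash(io) == lowercase(recorded)

original = "sepal_length,sepal_width\n5.1,3.5\n"
recorded = datahash(IOBuffer(original))

intact   = verify(IOBuffer(original), recorded)
tampered = verify(IOBuffer(replace(original, "3.5" => "3.6")), recorded)
println("intact: ", intact, ", tampered copy accepted: ", tampered)
```

Because the hash depends only on content, the same value could also serve as the identifier for an otherwise un-versioned instance of a data set.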

While hashing the data has a number of clear benefits, hashing large datasets can be rather time-consuming. Looking at the xxHash homepage, we see that MD5 and SHA1 both have a bandwidth a bit under a gigabyte per second. If we were to add xxHash.jl as a dependency, it looks like we could get up to ~30 GB/s (more realistically, at this point storage retrieval will be the bottleneck: SATA3 maxes out around 0.5 GB/s, and NVMe over PCIe 4.0 x2 has a maximum of 4 GB/s). So, while always verifying the content of small (<10GB) datasets seems reasonable, if we allow for people to be hypothetically accessing multi-terabyte datasets over AWS for instance, this suddenly starts seeming like a much worse idea. Furthermore, with frequently updating (possibly un-versioned) data sets, constantly updating the hash seems like a needless complication.
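
To put rough numbers on this, the estimate below simply divides data set size by throughput. The 2 TB size is an illustrative assumption; the 0.5 GB/s figure is the SATA3 ceiling quoted above.

```julia
# Back-of-envelope hashing time: size divided by sustained throughput.
hash_seconds(size_gb, throughput_gb_per_s) = size_gb / throughput_gb_per_s

small = hash_seconds(10, 0.5)     # 10 GB data set at the SATA3 ceiling
large = hash_seconds(2000, 0.5)   # hypothetical 2 TB data set, same throughput

println("10 GB: ", small, " s; 2 TB: ", round(large / 60; digits = 1), " min")
```

Twenty seconds is tolerable on every access; over an hour is not, which is why verification probably needs to be optional for large data sets.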

Not always hashing the data isn’t too bad though, as long as we provide alternative methods of keeping track of which instance of a data set is being used. Supporting a version parameter is a good step, but for frequently updating (possibly un-versioned) data sets the date at which it is acquired could be helpful.

Let us now consider where we put data that is saved. It would be good to support multiple versions of the same data set, so each instance of a data set can be put under a folder based on the UUID (renaming the data set doesn’t change the data, after all). The file name can be selected progressively, using the best version information we have available. We may as well keep the extension so any person (or program) looking at the data has a hint as to its nature, but it can be ignored for our purposes.

- $(UUID)/hash-$(hash).$(ext)

- $(UUID)/version-$(version).$(ext)

- $(UUID)/datetime-$(datetime).$(ext)

- $(UUID)/data.$(ext)
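
The progressive selection above could be sketched as follows. The function name `storepath` and its keyword arguments are hypothetical; the only thing taken from the list is the order of preference (hash, then version, then datetime, then a plain fallback).

```julia
using Dates  # standard library, used for the datetime example

# Hypothetical helper: choose a file name from the best identifying
# information available, in the order hash > version > datetime > fallback.
function storepath(uuid::AbstractString, ext::AbstractString;
                   hash = nothing, version = nothing, datetime = nothing)
    stem = if hash !== nothing
        "hash-$hash"
    elseif version !== nothing
        "version-$version"
    elseif datetime !== nothing
        "datetime-" * string(datetime)
    else
        "data"
    end
    joinpath(uuid, "$stem.$ext")
end

uuid = "afcd7e9c-9f34-4db2-aa02-7ec0554639ee"
println(storepath(uuid, "csv"; hash = "6b973afd", version = "1.0"))
println(storepath(uuid, "csv"; datetime = DateTime(2021, 1, 2, 3, 4, 5)))
println(storepath(uuid, "csv"))
```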

2.3. Acquiring data

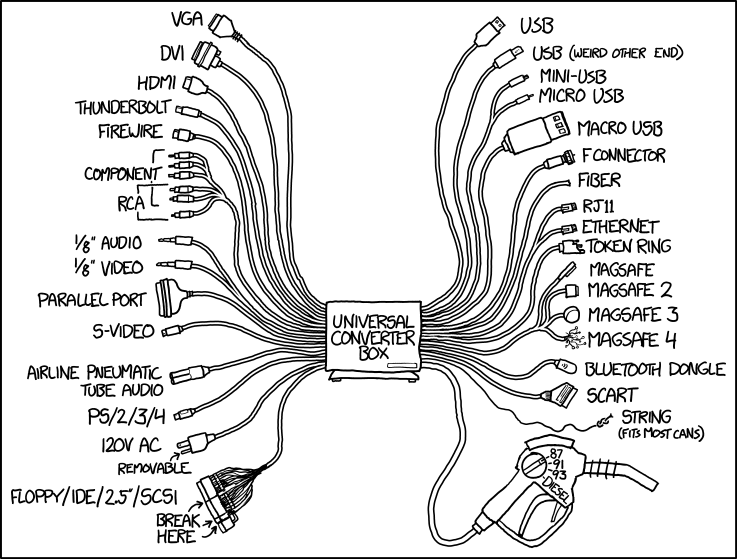

Data must be stored in a certain location. So, we must provide a way of specifying both:

- what the location of the data is — the data path

- how that location should be accessed — the storage driver

It is also possible that one may want to specify multiple storage locations for the data, for instance:

- A publicly available copy of the data (slow, always available)

- A speedy copy available on the lab’s local storage server (fast, not always available)

In such a situation it would seem sensible to be able to provide both locations as options, but give priority to the closer data store. This would also allow mirrors for data sets to be given, improving robustness.
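
A rough sketch of priority-ordered storage with fallback is given below. The `StorageOption` struct and the `isavailable` check are assumptions made for illustration (a real driver would probe the network or the disk itself); lower priority numbers are tried first, matching the priority attribute used later in this document.

```julia
# Hypothetical storage description: lower `priority` numbers are tried first.
struct StorageOption
    driver::Symbol
    path::String
    priority::Int
end

# Assumed availability check: local paths must exist, anything else is
# optimistically treated as reachable.
isavailable(s::StorageOption) = s.driver != :filesystem || ispath(s.path)

# Return the highest-priority storage option that is currently available.
function choosestorage(options::Vector{StorageOption})
    for s in sort(options; by = o -> o.priority)
        isavailable(s) && return s
    end
    nothing
end

labserver = StorageOption(:filesystem, joinpath(mktempdir(), "missing"), 1)
public    = StorageOption(:url, "https://example.org/iris.csv", 2)
chosen    = choosestorage([public, labserver])
println("using the ", chosen.driver, " copy")
```

Here the lab copy is preferred but absent, so the public mirror is selected.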

As mentioned earlier, “acquiring the data” can take many forms. It could be downloading a file, or opening a connection to a remote filesystem and passing a connection to a file. A single storage driver could reasonably provide multiple modes of access. We would want to provide the ability to request a particular mode of access to allow for intelligent restrictions. For instance it would make sense to just download a small file instead of opening a remote file handle, and similarly one may want to only open a remote file handle to a massive file, and never try to download it.

2.3.1. Recency

Sometimes just having some copy of data from a prescribed source isn’t good enough. Sometimes, you want it to be up to date, i.e. no older than a certain maximum age (e.g. data on the next week’s weather forecasts). Some storage drivers may always provide the latest data (for instance, a remote file handle) while others start becoming out of date the moment the data is acquired (e.g. downloading from a URL).

It would thus make sense for storage drivers to accept a recency parameter, which could simply be the maximum age of the data in seconds. Two special values should also be allowed for:

- 0, indicating that the data must be re-acquired every time it is accessed.

- -1, indicating that the data never expires.
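
The recency rule, including both special values, might look like this in code. The function name `isstale` and the `at` keyword are hypothetical; the semantics of 0 and -1 follow the description above.

```julia
using Dates  # standard library

# `recency` is the maximum age in seconds. 0 means "always re-acquire",
# -1 means "never expires".
function isstale(acquired::DateTime, recency::Integer; at::DateTime = now())
    recency == -1 && return false
    recency == 0 && return true
    age_ms = Dates.value(at - acquired)   # DateTime differences are in milliseconds
    age_ms > 1000 * recency
end

acquired = DateTime(2024, 1, 1)
oneweek  = 604800   # seconds, as used for the weather example in section 5

println(isstale(acquired, oneweek; at = DateTime(2024, 1, 5)))
println(isstale(acquired, oneweek; at = DateTime(2024, 1, 9)))
```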

2.4. Loading data

Acquiring the data is an excellent first step, however to actually do anything with it, we need a Julia representation we can work with. We want a solution that is as simple and convenient as possible, while allowing for the wide array of data formats, and Julia types that could reasonably represent the data.

Note that I say “types” not “type”, as there may well be multiple Julia objects the data could be represented as (e.g. IO, Array, or DataFrame). We can embrace this possibility by supporting multiple “loaders”, and allowing the user to request a particular form of the data. Each loader could provide a list of types it can provide (qualified by module, if outside of Base. and Core.), which is then matched against the type the user is asking for when they ask for the data. Note that we will also want to match the loader method(s) that provide that type against the modes of access that the storage driver(s) can provide.

There is a further complication in the various different ways a file can be parsed. For example when using CSV.read one can specify the types each column should be read as. This necessitates the ability to provide parameters to the loader too.
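
The two-sided matching described above (requested type against what a loader produces, and storage access modes against what a loader consumes) can be sketched with plain subtype checks. `LoaderSpec`, its fields, and `findloader` are hypothetical names, and real specifications would carry module-qualified type names as strings rather than live types.

```julia
# Hypothetical loader description.
struct LoaderSpec
    driver::Symbol
    supports::Vector{Type}   # Julia types this loader can produce
    accepts::Vector{Type}    # access modes it can consume from storage
end

# Return the first loader that can produce `want` from one of the access
# modes the storage driver offers, or `nothing` if there is no match.
function findloader(loaders, want::Type, modes)
    for l in loaders
        produces = any(T -> T <: want, l.supports)
        readable = any(M -> any(A -> M <: A, l.accepts), modes)
        produces && readable && return l
    end
    nothing
end

loaders = [LoaderSpec(:raw,   [String],         [IO]),
           LoaderSpec(:lines, [Vector{String}], [IO])]

match = findloader(loaders, AbstractVector, [IOBuffer])
println(match.driver)
```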

2.4.1. Named loaders

There are a number of common data formats, such as CSV, JSON, and HDF5. For such cases, we should provide (and allow the user and other packages to provide) loaders that can be easily applied to a data source.

This has already been thought of in DataSets.jl#17, with a global collection of named loaders which can be added to on the fly. In that PR each loader’s function signature takes three arguments:

- A loader object, with the name as a type parameter

- A module the loader relies on

- The input data format

For example, here’s the signature of the CSV loading function.

the loader and the required CSV module are registered with

I think we can support something a bit more general, with a usage along these lines:

julia

load(loader::Val{:csv}, source::IO, as::Type{DataFrame}; parameters::Dict{String, <:Any}=Dict{String, Any}(), CSV::Module)
# ...
register_loader!(:csv, [(:CSV, "336ed68f-0bac-5ca0-87d4-7b16caf5d00b")])

Compared to the form in the aforementioned PR, this allows for:

- A single loader to produce multiple types

- Multiple modules to be used

- The Base.open methods to remain closer to their usage; we can separately define a Base.open method that determines the right load method to call.

- All the supported loader forms to be more easily inspected

The decision to split layer::DataLayer{:csv} into loader::Val{:csv} and parameters::Dict{String, <:Any} may seem arbitrary, however it is done to make the named loader and anonymous loaders’ method signatures closer.
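
To make the dispatch pattern concrete without pulling in CSV or DataFrames, here is a sketch using a made-up `:lines` loader. The loader name, the `"keepempty"` parameter, and the Symbol-based convenience method are all assumptions for illustration; only the Val-plus-parameters shape comes from the proposal above.

```julia
# A stand-in "lines" format so the sketch needs no external packages.
function load(::Val{:lines}, source::IO, ::Type{Vector{String}};
              parameters::Dict{String, <:Any} = Dict{String, Any}())
    keep = get(parameters, "keepempty", true)
    [l for l in eachline(source) if keep || !isempty(l)]
end

# The same named loader can provide a second type.
function load(::Val{:lines}, source::IO, ::Type{String};
              parameters::Dict{String, <:Any} = Dict{String, Any}())
    read(source, String)
end

# Look the loader up by name at run time (e.g. from a specification file).
load(name::Symbol, source::IO, as::Type; kwargs...) =
    load(Val(name), source, as; kwargs...)

text  = "a\n\nb\n"
lines = load(:lines, IOBuffer(text), Vector{String};
             parameters = Dict("keepempty" => false))
whole = load(:lines, IOBuffer(text), String)
println(lines)
```

Because every supported form is an ordinary method, `methods(load)` lists what is available, which is the inspectability benefit mentioned above.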

2.4.2. Anonymous loaders

While named loaders exist for general cases, sometimes there are significant computational or ergonomic gains that can be made by having bespoke loaders for individual data sets. It does not make sense for these to be named, as they are likely used only once.

This may seem like a contrived hypothetical, however I have personally encountered exactly these scenarios in the past. We could simply ask users to just grab the path to the data and mix their data-loading code with their data-processing code; however, we can do better.

Since include("?.jl") returns the final statement in the file, an anonymous loader can simply consist of a file that returns a function supporting the load method seen earlier, with two modifications

- No loader name parameter is needed (for obvious reasons)

- No module keyword arguments will be allowed for, as we can reasonably expect that any modules used will have already been loaded by the project.

The following example would thus be a near-minimal anonymous loader.

julia

function (source::IO, as::Type{UInt8}; parameters::Dict{String, <:Any}=Dict{String, Any}())
    read(source, 1)[1]
end

To support this, we will reserve the loader name custom.
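
The include-based mechanism can be tried end to end with a temporary file. The file name `firstbyte.jl` is made up for the example, and `Base.invokelatest` is used defensively so the freshly defined function can be called even if this code is itself run from inside a function.

```julia
# Write a loader file whose final expression is an anonymous function.
loaderfile = joinpath(mktempdir(), "firstbyte.jl")
write(loaderfile, """
function (source::IO, as::Type{UInt8}; parameters::Dict{String, <:Any} = Dict{String, Any}())
    read(source, UInt8)
end
""")

# `include` evaluates the file and returns the value of its last expression,
# which here is the anonymous loader itself.
loader = include(loaderfile)

firstbyte = Base.invokelatest(loader, IOBuffer("abc"), UInt8)
println(firstbyte)
```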

2.5. Data dependencies

Often, in the process of analysing/processing some data, more data is created.

We can represent the relation between data sets as a DAG, for instance here’s one from my work:

A derived data set could be constructed via a bespoke loader, with the other data sets as additional inputs and provided as keyword arguments (like modules).

Ideally, we could ask for any data set and it would be lazily constructed as needed from its dependencies. There already exists a Julia library (Dagger.jl) which lazily executes computations as a DAG, and parallelises them when possible. If this can be applied nicely here, it could allow us to add a lot of functionality with comparatively little effort.
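
As a sketch of lazy construction only (this is not Dagger.jl and does nothing in parallel), a derived data set can be modelled as a build function plus named inputs, realised recursively with a cache. `Derived` and `realise!` are hypothetical names.

```julia
# Hypothetical derived data set: a build function plus the names of its inputs.
struct Derived
    inputs::Vector{Symbol}
    build::Function
end

# Build `name` on demand, realising each dependency at most once.
function realise!(cache::Dict{Symbol, Any}, specs::Dict{Symbol, Derived}, name::Symbol)
    haskey(cache, name) && return cache[name]
    spec = specs[name]
    args = [realise!(cache, specs, dep) for dep in spec.inputs]
    cache[name] = spec.build(args...)
end

calls = Ref(0)   # counts how often the shared source is actually built
specs = Dict(
    :raw     => Derived(Symbol[], () -> (calls[] += 1; [1, 2, 3])),
    :doubled => Derived([:raw], raw -> 2 .* raw),
    :summary => Derived([:raw, :doubled], (raw, doubled) -> sum(raw) + sum(doubled)),
)

cache  = Dict{Symbol, Any}()
result = realise!(cache, specs, :summary)
println(result, " (raw built ", calls[], " time)")
```

Only the data sets reachable from the requested one are built, and the shared `:raw` input is computed once even though two data sets depend on it.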

It’s also worth explicitly noting that with a git annex storage driver, thanks to the ability to prioritise storage locations, results that have been computed on another machine could be fetched automatically — isn’t that neat!

2.6. Writing data

Producing intermediate data will require support for writing. I think we can consider a writer to be a mirror to a loader.

\begin{align*}
\text{Storage (Data)} &\xrightarrow{\text{loader}} \text{Julia Object (Information)} \\
\text{Storage (Data)} &\xleftarrow{\text{writer}} \text{Julia Object (Information)}
\end{align*}

I’m quite attracted to this interpretation, as it means that writers can be structured near-identically to loaders. It gives both us and the user less to think about.

julia

write(writer::Val{:csv}, destination::IO, from::DataFrame; parameters::Dict{String, <:Any}, CSV::Module)
# ...
register_writer!(:csv, [(:CSV, "336ed68f-0bac-5ca0-87d4-7b16caf5d00b")])

We also get support for bespoke/anonymous writers for free, which is rather handy when constructing data dependencies.

2.7. Caching

It’s entirely realistic that a bespoke data loader could go to great pains to reformulate the data source into something much easier to work with[2], or that an esoteric format may naturally take a long time to be processed into a Julia object.

In many cases, I expect loading a dump of the Julia object to be faster than reparsing the data source.

These situations make a compelling case for caching support. Thanks to the existence of packages like JLD2, this should be fairly easy to implement. Thanks to the ability to prioritise storage locations, there’s a good chance this can be implemented as a top-priority filesystem store, with a writer that writes to that location. As such, caching wouldn’t be directly supported by the specification, but possible using the existing components of it. I find this to be an attractive option.

We may have to have this as an explicit feature though. The hard part (as always) is cache invalidation. Particularly in the case of bespoke loaders, a change to the loading function may well change the resulting Julia object. This can be resolved by including the hash of the file defining the bespoke loader, in addition to information on the data dependencies (if any).
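
One way to realise this invalidation rule is to derive a cache key from the loader file's contents together with the hashes of the inputs. The sketch below assumes SHA-1 via the SHA standard library and sorts the input hashes so their order does not matter; `cachekey` is a hypothetical name.

```julia
using SHA  # standard library

# Hypothetical cache key: changes whenever the loader file or any input changes.
function cachekey(loaderfile::AbstractString, inputhashes::Vector{String})
    inputs = Vector{UInt8}(join(sort(inputhashes), "\n"))
    bytes2hex(sha1(vcat(read(loaderfile), inputs)))
end

loaderfile = joinpath(mktempdir(), "loader.jl")
write(loaderfile, "source -> read(source, String)")
before = cachekey(loaderfile, ["aa33b9ff", "06d36877"])

# Editing the loader invalidates the cache even though the inputs are unchanged.
write(loaderfile, "source -> strip(read(source, String))")
after = cachekey(loaderfile, ["aa33b9ff", "06d36877"])
println(before == after ? "cache still valid" : "cache invalidated")
```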

Another approach we could take is defining a cache loader/writer. If we supply the entire data object it could compute hashes etc. automatically, without needing funky arguments. I feel that this may well be the best option.

Only certain results are cacheable though. There should either be a list of non-cacheable types (e.g. IO, Function), or loaders should supply cacheability information with the result.

2.8. Chained loaders

Conceptually, taking a .csv file and running CSV.read on it is rather simple.

What about taking an encrypted, compressed, .csv file and then:

- Unencrypting it

- Decompressing it

- Parsing it with CSV.File

I’d argue that we’ve just created three new derived data sets from the original data:

- The data, unencrypted

- The data, unencrypted and decompressed

- A DataFrame representation of the unencrypted, decompressed data

There are two approaches we could take here:

- Allow each data set to have multiple loaders, which feed into each other

  - This would require expanding the loader syntax

- Treat each transformation of the data as a new data set

I am inclined towards the second approach for a number of reasons, both conceptual and practical.

- “It’s all the same data” feels like sweeping the problem under the rug

- Treating each transformation as a new data set can already be cleanly expressed with data dependencies

- It’s conceivable that some of these layers may be slow, and having each layer be a data set allows for easier caching

The glaring downside of the second approach is that the data specification will be a tad more complicated, however I think this can be near-entirely mitigated by tooling.

2.9. Layers of data sources

It’s tempting to say that all data referenced should reside in a single specification file (encapsulation is good), however there are some eminently reasonable situations in which more than one would be needed. For example:

- The team you’re working with has a project data collection, and you want to try a spin-off where you take that data collection and add a few data sets of your own.

- A useful collection of data sets should be able to be published as a package and imported, making their data sets available without having to resort to glorified copy-paste.

One solution to this would be to support a stack of data collections. Furthermore, by adding a name parameter to a particular collection (given in the specification file or set manually when loading) references to a data set by the same name in multiple collections can be disambiguated. I’m inclined to say that any reference to a data set should have the name parameter supplied, with the exception of the “base” layer of the data stack.
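
A minimal sketch of such a stack follows. The `Collection` struct and `lookup` function are hypothetical, and a data set is reduced to a description string to keep the example small. Unqualified references consult only the base layer (taken here to be the first element of the stack), while qualified references go to the named collection.

```julia
# Hypothetical collection: a name plus its data sets (name => description).
struct Collection
    name::String
    datasets::Dict{String, String}
end

# Resolve a reference against the stack. Without a collection name only the
# base layer (the first element) is consulted.
function lookup(stack::Vector{Collection}, dataset::AbstractString; collection = nothing)
    layers = collection === nothing ? stack[1:1] :
             filter(c -> c.name == collection, stack)
    for c in layers
        haskey(c.datasets, dataset) && return (c.name, c.datasets[dataset])
    end
    nothing
end

stack = [Collection("spinoff", Dict("iris" => "my re-labelled iris")),
         Collection("team",    Dict("iris" => "the team's iris",
                                    "weather" => "forecasts"))]

println(lookup(stack, "iris"))
println(lookup(stack, "iris"; collection = "team"))
```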

This should easily allow for data packages like RDatasets to be reproduced under this framework.

2.10. Data set identification string

It would be nice to have a convenient way to refer to a dataset. I suggest the following syntax (with optional components enclosed in square brackets):

The @VERSION tag should also support a special @latest form to refer to the most recent version available.

By explicitly constructing this syntax, we can unify the manner in which we refer to a data set across this framework, in the specification file and in code.

One interface to this could be via say a data"" macro, e.g.

data"demo#59e6fa4962523389"

data"demo@v0.1"

data"RDatasets:datasets/iris"
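
The grammar itself is not reproduced in this document, so the parser below is only a guess inferred from the examples here and in section 3.4 (an optional `COLLECTION:` prefix, then the name, then optionally `@VERSION` or `#HASH`, then optionally `::TYPE`). The function name `parseident` and the regular expression are assumptions, not the proposed syntax.

```julia
# Assumed shape, inferred from the examples: [COLLECTION:]NAME[@VERSION|#HASH][::TYPE]
function parseident(s::AbstractString)
    pattern = r"^(?:(?<collection>[^:@#]+):(?!:))?(?<name>[^:@#]+)(?:@(?<version>[^:#]+)|#(?<hash>[^:]+))?(?:::(?<type>.+))?$"
    m = match(pattern, s)
    m === nothing && return nothing
    # Components that are absent from the string come back as `nothing`.
    (collection = m[:collection], name = m[:name], version = m[:version],
     hash = m[:hash], type = m[:type])
end

for ident in ["demo#59e6fa4962523389", "demo@v0.1",
              "RDatasets:datasets/iris", "setosa@v1::Matrix"]
    println(ident, " => ", parseident(ident))
end
```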

2.11. A common store

A data set may well be reused in multiple projects. Having n copies has the potential to waste a lot of space if the data sets are large. Having a central data store allows for a single copy to be shared across each project.

This introduces an additional complication though, as obsolete data files can accumulate, taking up more and more space. To avoid this issue, some form of data store garbage collection must be implemented. In order for this to be viable, the store needs to possess a certain amount of metadata on the items stored within it.

![Menu -> Manage -> [Optimize space usage, Encrypt disk usage report, Convert photos to text-only, Delete temporary files, Delete permanent files, Delete all files currently in use, Optimize menu options, Download cloud, Optimize cloud , Upload unused space to cloud] Disk Usage](https://imgs.xkcd.com/comics/disk_usage.png)

At its simplest, we could have a list of data specification files contributing to the store. Any files in the store which have no connections to any of the data specification can be presumed to be garbage and removed.

We could go for something a bit richer, and give the data store its own data manifest with information on each file, enumerating the data specifications which refer to the data. This allows for garbage collection in the same manner as the “simple” option, but also provides a few more possibilities, such as listing which data specifications are responsible for the largest files in the store. I am inclined to go with this approach.
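
With a manifest of that shape, garbage collection reduces to finding stored files that no live specification refers to. The manifest layout (stored file mapped to the specification files that reference it) and the `garbage` function are assumptions for illustration.

```julia
# Hypothetical store manifest: stored file => specification files referring to it.
# A file is garbage when none of its referrers is still a live specification.
function garbage(manifest::Dict{String, Vector{String}}, livespecs)
    sort([file for (file, specs) in manifest if !any(in(livespecs), specs)])
end

manifest = Dict(
    "afcd7e9c/hash-6b973afd.csv" => ["projA/Data.toml", "projB/Data.toml"],
    "5ddbafb0/version-0.1.0.gz"  => ["projB/Data.toml"],
    "7cbd6dda/data.json"         => String[],
)

# Suppose projB has been deleted, so only projA's specification is live.
live = Set(["projA/Data.toml"])
println(garbage(manifest, live))
```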

2.12. Core functionality vs. plugins

So far, I have outlined a lot of the functionality I think a good data management system should have; more consideration is warranted for how such functionality should be supported.

Ultimately, we want a system that not just supports the situations I have in mind, but situations I haven’t even conceived of. Of course, the extent to which we can consider currently inconceivable use cases is rather limited, but we can try to make a system which is as flexible as reasonably possible. To encourage this, I will endeavour to isolate the essential core of the overall system discussed and then design the rest of the features as extensions/plugins. It is my hope that doing this will result in two major benefits:

- A clearer vision and implementation of the foundational functionality

- A more capable plugin system, with better potential to be applicable to situations I have not thought of

The kernel of the ideas presented here is the relation between information and data. Stored somewhere we have data, bits strung together. We want to work with information, the semantics of the data. Here, we have devised a way of flowing between these forms.

This is the haecceity[3] of this framework. Let us thus try to build the prototype system as a collection of such relations, and a plugin system powerful enough to add everything else on top of that.

3. Designing the Data.toml

3.1. Information about the collection

For starters, we don’t want to accidentally have to deal with format incompatibilities, should we realise we made a mistake in future. A config version is thus required.

To help enable data layers, we should add a name parameter, and may as well add a uuid to provide an escape from name collisions.

This feels like the bare essentials to me. Further information can be organised under a data key (preventing users from creating a data set called data, a small loss in my mind).

3.2. Default values

It is already evident that each data set declaration will possess a number of parameters. To avoid needless repetition, we can define a defaults property.
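
Applying defaults could be as simple as a dictionary merge in which a data set's own values win. The sketch below uses the TOML standard library (Julia 1.6 or later) on a cut-down specification; the `[data.defaults]` layout follows the example in section 5, while `withdefaults` is a hypothetical helper.

```julia
using TOML  # standard library since Julia 1.6

config = TOML.parse("""
[data.defaults]
store = "global"
recency = -1

[[iris]]
description = "Fisher's Iris data set"
store = "local"

[[recentweather]]
description = "recent weather information"
recency = 604800
""")

defaults = config["data"]["defaults"]

# Values given on the data set itself take precedence over the defaults.
withdefaults(entry) = merge(defaults, entry)

iris    = withdefaults(config["iris"][1])
weather = withdefaults(config["recentweather"][1])
println(iris["store"], " ", iris["recency"], " / ",
        weather["store"], " ", weather["recency"])
```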

3.3. Data stores

Really, a data store is just a labelled storage location. As such, we should be able to get away with two parameters:

- the name (label) of the data store

- the storage driver used

Additional properties can then be passed as parameters to the storage driver.

3.4. Structure of a data set

In this section we will use the Iris data set as an example, constructing a specification which loads it from a cached copy if it exists, and from a URL if not.

This should be formalised further if the ideas here are taken further, but for now this is largely “specification by example”.

The details discussed here go beyond the idea of core functionality introduced in 2.12. This is done deliberately to flesh out what the system would look like in usage, which helps with the design of the core.

3.4.1. Metadata

Information about the data not specific to a particular storage driver, loader, or writer should be recorded centrally.

From our prior contemplation, the list of properties includes:

- the data set name

- a description of the data

- a UUID

- the hash, version, or datetime of the data

- input data sets

toml

[[iris]]
description="Fisher's Iris data set"
hash="6b973afd881a52aa180ce01df276d27b7cd1144b"
uuid="afcd7e9c-9f34-4db2-aa02-7ec0554639ee"

We can provide input data sets as a table, with the keys being the keyword argument name they should have when being provided, with common data set identification strings as the values.

toml

# Say that Fisher had collected the data on each species individually
# and we needed to put them together...
inputs={setosa="setosa@v1::Matrix", virginica="virginica#06d36877fcf35776::Matrix", versicolor="versicolor::Matrix"}

3.4.2. Storage

Each storage option must have a driver specified. We have already discussed the benefits of supporting a priority attribute. In order to match up storage providers with loaders, it would be good to know how the storage location can be accessed. This can be specified as a number of Julia types, using a supports attribute. I will also add an id attribute so we can refer to a particular store in a writer later. All other attributes will be treated as keyword arguments for the driver.

toml

[[iris.storage]]
driver="filesystem"
supports=["DataSets.CacheFile"]
path="@__DATA__.jld2"
id="cachefile"
priority=1

[[iris.storage]]
driver="url"
supports=["DataSets.File", "Core.IO"]
path="https://raw.githubusercontent.com/mwaskom/seaborn-data/master/iris.csv"
priority=2

3.4.3. Loader

All loaders should have two attributes:

- The type of the loader

- The Julia type(s) the loader can provide

All other attributes can be considered parameters of the loader.

Let us now go through three example loaders.

In the first instance, we have a loader which simply reads from a cache file whenever available.

Now we have a second loader, also providing a DataFrame. Since the cache loader is fed by the highest priority storage location, this loader will implicitly have a lower priority and be used if no cache is available. Perhaps loader priority should be explicit? This seems like it could be a good idea.

Lastly we have a custom loader which can provide a NamedTuple or Vector, so should the user ask for those types this will also be used. A hash is also needed for a custom loader to detect changes in the loader file.

toml

[[iris.loader]]
driver="custom"
supports=["Core.NamedTuple", "Base.Vector"]
path="loaders/iris.jl"
hash="23898c818240732a4ef245957d0793e53ffe5eae"

3.4.4. Writer

As discussed earlier, we can consider writers as a mirror to loaders. This means they will operate in much the same manner, but it seems like a good idea to add two extra attributes:

- auto, whether writing should happen automatically or only when explicitly invoked

- storage, the storage location to use

As an alternative to a storage id, it should also be possible to declare a new storage location.

3.4.5. Manifest

With the option of using non-exact data sets versions, it’s important that the exact versions of any inputs be recorded. This could be a hash of the various parameters of each data set used, along these lines.

toml

[[iris.manifest]]
"setosa@v1::Matrix"=0xbd76999238a95e9e
"virginica#06d36877fcf35776::Matrix"=0x119455cca367e5e1
"versicolor::Matrix"=0x882bcd21f01a8742

Supporting the name of the data set here and in the inputs is nice for human-readability, but when this is being generated really the UUIDs should be used.

4. API

4.1. Types

- DataCollection

- DataSet

- DataStore

- AbstractDataTransformer

- DataStorage{:driver}

- DataLoader{:driver}

- DataWriter{:driver}
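
One possible reading of these names is sketched below, with the three driver-parameterised types as subtypes of AbstractDataTransformer. The subtype relationship, the field layout, and the `drivername` helper are all guesses made for illustration; the document only gives the type names.

```julia
abstract type AbstractDataTransformer end

# The driver is carried as a type parameter so methods can dispatch on it.
struct DataStorage{D} <: AbstractDataTransformer
    parameters::Dict{String, Any}
end

struct DataLoader{D} <: AbstractDataTransformer
    supports::Vector{String}
    parameters::Dict{String, Any}
end

struct DataWriter{D} <: AbstractDataTransformer
    parameters::Dict{String, Any}
end

# Hypothetical accessor recovering the driver name from the type parameter.
drivername(::DataStorage{D}) where {D} = D
drivername(::DataLoader{D}) where {D} = D
drivername(::DataWriter{D}) where {D} = D

csvloader = DataLoader{:csv}(["DataFrames.DataFrame"],
                             Dict{String, Any}("delim" => ","))
urlstore  = DataStorage{:url}(Dict{String, Any}("path" => "https://example.org/iris.csv"))

println(drivername(csvloader), " loader fed by ", drivername(urlstore), " storage")
```

Parameterising on the driver means a plugin can add support for a new driver purely by defining methods on, say, `DataLoader{:mydriver}`.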

4.2. Functions

TBD

4.3. A data REPL

The REPL should exist to make common operations as convenient as possible, but should not provide any functionality inaccessible via the API.

It’s too early to work everything out, but we can consider some common situations and what a good experience could look like.

4.3.1. Creating a new data set

4.3.2. Downloading a data set from a URL

When you know which storage driver you want to use,

At this point we could immediately start loading the data. If that finishes soon we can use the result to help make more informed guesses, otherwise we should move on and start collecting more information.

data> get url https://raw.githubusercontent.com/mwaskom/seaborn-data/master/iris.csv
Downloaded iris.csv (3.8 KiB)
data set name: iris
loader(csv):
version(none):

Since the file is small, the hash should be automatically recorded.

4.3.3. Loading a local file as a dataset

4.3.4. Creating a custom loader for a dataset

4.3.5. Removing a data set

4.3.6. Listing the data sets specified

4.3.7. Creating a derived data set

4.3.8. Looking at a data store

4.3.9. Checking the validity of the Data.toml

4.3.10. Writing out some data

5. Example Data.toml

toml

name="example"
uuid="83d40074-8c69-46f0-b65d-e8f12dd83ead"
data_config_version=1
data.plugins = ["integrity"]

[data.defaults]
store="global"
recency=-1

[[data.store]]
name="global"
driver="filesystem"
path="@__GLOBALSTORE__"

[[data.store]]
name="local"
driver="filesystem"
path="@__DIR__/data"

# individual data sets now

[[iris]]
description="Fisher's Iris data set"
hash="6b973afd881a52aa180ce01df276d27b7cd1144b"
uuid="afcd7e9c-9f34-4db2-aa02-7ec0554639ee"
store="local"

[[iris.storage]]
driver="filesystem"
path="@__DATA__.jld2"
id="cachefile"
priority=1

[[iris.storage]]
driver="url"
path="https://raw.githubusercontent.com/mwaskom/seaborn-data/master/iris.csv"
priority=2

[[iris.storage]]
driver="s3"
path="s3://eu-west-1/knime-bucket/data/iris.data"
priority=3

[[iris.loader]]
driver="cache"
supports=["DataFrames.DataFrame"]

[[iris.loader]]
driver="csv"
supports=["DataFrames.DataFrame"]
parameters = { delim = "," }

[[iris.writer]]
driver="cache"
auto=true
storage="cachefile"

[[mydata]]
description="My data that isn't available anywhere else"
version="0.1.0"
uuid="5ddbafb0-c5be-4096-b07b-a86bd5da600e"

[[mydata.storage]]
driver="filesystem"
path="@__DIR__/mydata.tsv.gz"

[[mydata.loader]]
driver="gzip"
supports=["Core.IO"]

[[mydata]]
description="My data that isn't available anywhere else"
inputs=["5ddbafb0-c5be-4096-b07b-a86bd5da600e@latest::Core.IO"]
uuid="ab30c83c-11a7-4fd5-94e1-4b4305e54a80"

[mydata.manifest]
"5ddbafb0-c5be-4096-b07b-a86bd5da600e@latest::Core.IO"="aa33b9ffb3a79363"

[[mydata.loader]]
driver="custom"
supports=["Base.Dict"]
params="mydataconfig"
path="loaders/mydata.jl"
hash="97d170e1550eee4afc0af065b78cda302a97674c"

[[mydata.writer]]
driver="hdf5"

[[mydata.writer.storage]]
driver="filesystem"

[[mydataconfig]]
description="Configuration for loading mydata"
uuid="27cccb25-d9b7-45a2-9383-bb90e52317e1"

[[mydataconfig.loader]]
driver="raw"
supports=["Dict"]
data={some="value"}

[[recentweather]]
description="recent weather information"
uuid="7cbd6dda-f53b-454a-927c-6cdbd61c181b"

[[recentweather.storage]]
driver="url"
path="blah"
recency=604800

Footnotes:

[1] Not Invented Here, a tendency to “reinvent the wheel” to avoid using tools from external origins; it would of course be better if you (re)made it.

[2] I have written one such loader, that takes most of a day to parse the data source into a useful structure, but produces a .jld2 dump that can be loaded in under a minute.

[3] The aspects of a thing that make it a particular thing, the irreducible individuating differentia and quiddity[4] which define it.

[4] The universal qualities of a category that items within the category share.